Chinmay G. Pandit is the Digital Director of OnLabor and a student at Harvard Law School.

In this edition of Tech@Work: Illinois’s highest court considers whether federal collective bargaining law preempts BIPA; the EEOC publishes a new plan to enforce nondiscrimination laws against AI hiring technology; and working professionals discover the wonders — and dangers — of ChatGPT.

Illinois’s Highest Court Considers Federal Preemption of BIPA

In January, the Illinois Supreme Court heard oral arguments in the case of Walton v. Roosevelt University to consider whether federal collective bargaining law preempts state biometric privacy claims. William Walton, a former safety department employee at Roosevelt University, filed a lawsuit alleging that the university violated Illinois’s Biometric Information Privacy Act (BIPA) by collecting and storing Walton’s handprint data through its daily employee clock-in-clock-out system without following the statutorily mandated notice and consent procedures. BIPA, which has been at the center of a wave of litigation in recent years, lays out strict notice, disclosure, and disposal requirements for employers collecting employees’ biometric information such as hand-, finger-, and voiceprints. The statute also provides aggrieved workers with a private right of action to sue violating employers.

Roosevelt University initially moved to dismiss the claim, arguing that Walton was disqualified from asserting an individual BIPA claim under the Labor Management Relations Act (LMRA) due to his membership in a collective bargaining unit. Though the trial court denied Roosevelt’s motion, the appellate court subsequently reversed, holding that, under the LMRA, union members waive their individual bargaining rights to change employer timekeeping procedures, even when those procedures encompass biometric data collection. Any effort to renegotiate the timekeeping system, the school argued, must be led by Service Employees International Union Local 1, Walton’s exclusive bargaining agent.

In oral arguments, the Illinois Supreme Court justices examined the relationship between federal collective bargaining law and BIPA, asking the parties whether the bargaining agreements expressly obviated BIPA claims and whether the existence of federal collective bargaining law absolves employers of their duty to comply with state law. The court’s decision will both shape and clarify the limits of state BIPA claims, which have expanded in scope over the past half-decade.

The EEOC Takes Aim at AI-Driven Biases

The Equal Employment Opportunity Commission (EEOC) published a Draft Strategic Enforcement Plan (SEP) in January seeking public comment as the commission turns its attention to the use of artificial intelligence (AI) tools in workplace hiring practices. For the first time since 1978, the EEOC has formally weighed in on hiring technology, as 79% of employers now use AI in their recruitment and talent selection processes, including to screen resumes, evaluate recorded interviews using camera sensors, and assess personality tests. The concern, according to the agency, is that these automated tools may run afoul of nondiscrimination laws by filtering candidates, intentionally or otherwise, based on protected characteristics such as race, age, and gender. By explicitly calling out technologically driven biases, the EEOC has signaled to employers that they will be held accountable for discriminatory hiring practices, even those arising from poorly designed algorithms.

The SEP’s publication comes after the EEOC filed its first lawsuit related to AI-driven discrimination last year, alleging that an English-language tutoring service, iTutorGroup, designed its online recruitment software to reject older applicants due solely to their age. The SEP also builds on the commission’s previously issued guidance that employers are responsible for inspecting and ensuring that their AI tools comply with the Americans with Disabilities Act. New York City has also been active in this space, implementing a set of first-of-its-kind laws to regulate the use of AI in hiring and mandate annual “bias audits” of employers’ hiring tactics.

New York City’s legislation will serve as an important model for Congress as the EEOC ramps up its nondiscrimination enforcement. As of now, federal law does not require companies to disclose the AI technology they use. As such, the EEOC will likely face difficulties in identifying AI-driven discriminatory practices, particularly when the biases are not obvious to employees. In the case of iTutorGroup, for example, the alleged discrimination came to light only when previously rejected candidates received job offers after re-submitting copies of their applications with modified birthdates. To identify less overt biases, Congress will likely need to implement an AI disclosure requirement for employers.

ChatGPT Gains Momentum Among Working Professionals

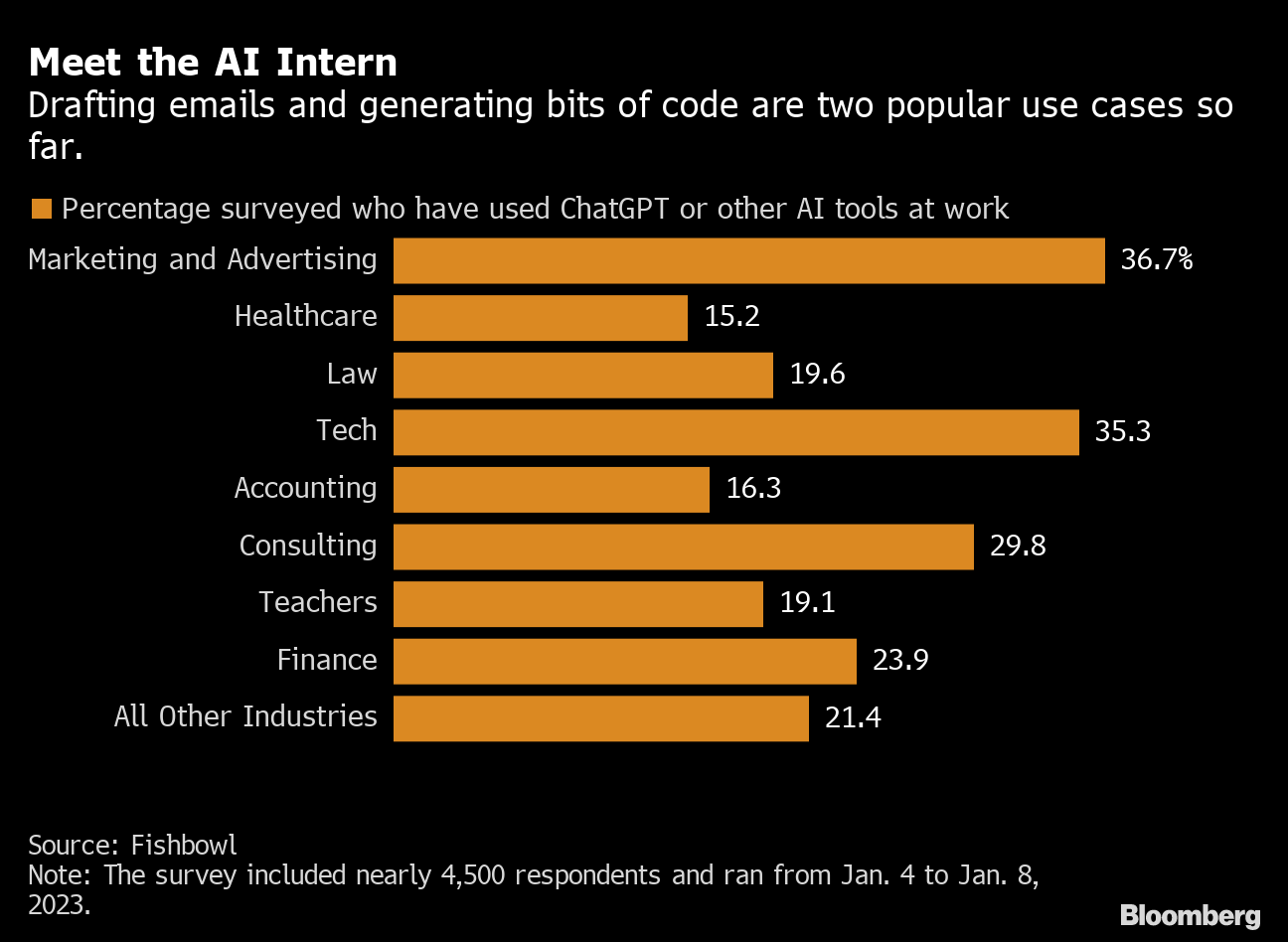

ChatGPT, the popular generative AI chatbot developed by OpenAI, appears to have established a foothold in the workplace. A recent survey revealed that nearly 30% of professionals have used ChatGPT, a tool that uses publicly available information to instantly produce sophisticated responses to prompts, in the course of their day-to-day work. The 4,500 workers surveyed represented companies such as JPMorgan, Google, Amazon, and Meta, and reported that they used the technology to “draft emails, generate ideas, write and troubleshoot bits of code and summarize research or meeting notes.” Marketing, technology, and consulting workers have used the technology at the highest rates thus far.

Several corporate leaders are hopeful that implementing ChatGPT or an equivalent technology into daily practices will boost efficiency and allow employees to focus on higher-level responsibilities. As a result, Microsoft, a major investor in OpenAI, is integrating ChatGPT into its service packages, enabling business clients to seamlessly incorporate the technology into their existing infrastructure, thus, as Microsoft’s CEO claims, “helping people do more with less.”

However, despite the excitement around the technology’s potential benefits, critics worry about its accuracy: the tool has been shown to be unreliable on several occasions. Observers also worry that, without proper oversight, the AI tool could jeopardize employers’ sensitive information or lead to inadvertent copyright infringement. Notably, in the education context, New York City public schools have already banned students from using ChatGPT out of concern that students will use the program to cheat on assignments.
